
Why Use Replication across AWS S3 Buckets?

Replication between S3 buckets enables automatic, asynchronous copying of objects as they are written to the source bucket. Replication helps us achieve the following:

- Store objects into different storage classes for different types of application needs.

- Replicate objects while retaining metadata.

- Keep objects stored over multiple AWS Regions for High Availability.

- Maintain object copies under different ownership.

Prerequisites For Replication

To replicate objects across S3 buckets, the following are required:

- A source bucket where the objects are written initially. The owner of the source bucket must have the source and destination AWS Regions enabled for their account.

- A destination bucket where the objects will be replicated. The owner of the destination bucket must have the destination Region enabled for their account.

- The source and destination buckets can also be in the same account.

- Both source and destination buckets must have versioning enabled.

- Amazon S3 must have permissions to replicate objects from the source bucket to the destination bucket or buckets on your behalf.

- If the owner of the source bucket doesn’t own the object in the bucket, the object owner must grant the bucket owner READ and READ_ACP permissions with the object access control list (ACL).

- If the source bucket has S3 Object Lock enabled, the destination buckets must also have S3 Object Lock enabled.

- To enable replication on a bucket that has Object Lock enabled, you must use the AWS Command Line Interface, REST API, or AWS SDKs.
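The versioning prerequisite can also be checked and enabled from code. The following is a minimal sketch, assuming the boto3 SDK is used; the helper name `ensure_versioning` is hypothetical, and the client is passed in as a parameter so the function itself makes no assumptions about credentials or Regions.

```python
def ensure_versioning(s3_client, bucket_name):
    """Enable versioning on a bucket unless it is already enabled.

    `s3_client` is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    Returns True if versioning was switched on, False if it was already on.
    """
    current = s3_client.get_bucket_versioning(Bucket=bucket_name)
    # The "Status" key is absent when versioning has never been configured.
    if current.get("Status") == "Enabled":
        return False
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration={"Status": "Enabled"},
    )
    return True


# Example usage (requires boto3 and AWS credentials):
# import boto3
# s3 = boto3.client("s3")
# for name in ("jumisa-source-bucket", "jumisa-replica-bucket"):
#     ensure_versioning(s3, name)
```

Both the source and the destination bucket need this setting before a replication rule can be saved.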

Replication Types

Several types of replication can be used, depending on the business requirements.

- Same-Region Replication

The objects from one S3 bucket can be replicated into another S3 bucket in the same region within the same or different account.

- Cross-Region Replication

The objects from one S3 bucket can be replicated into another S3 bucket in a different region within the same or a different account.

- Two-Way Replication

When there are two buckets A and B, the objects written in either bucket can be replicated into the other bucket.

- S3 Batch Replication

All the replication methods mentioned above replicate new objects automatically, shortly after they are written, with or without filters.

Batch replication is an on-demand job that can be used when only a particular set of existing objects needs to be replicated. For example, only the objects that previously failed to replicate are to be replicated at a given time.

Setting Up Replication

Let us learn about S3 replication with an example of same-region replication within the same account.

Source Bucket

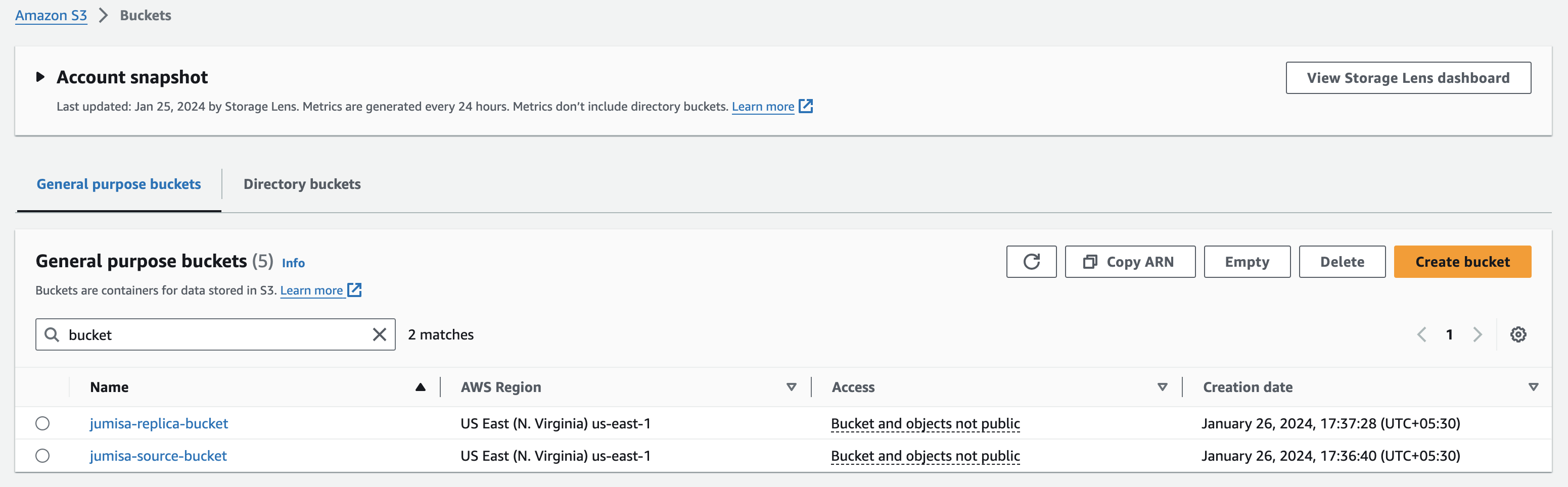

- Let us create an S3 bucket in the N. Virginia (us-east-1) Region. The bucket type is General purpose.

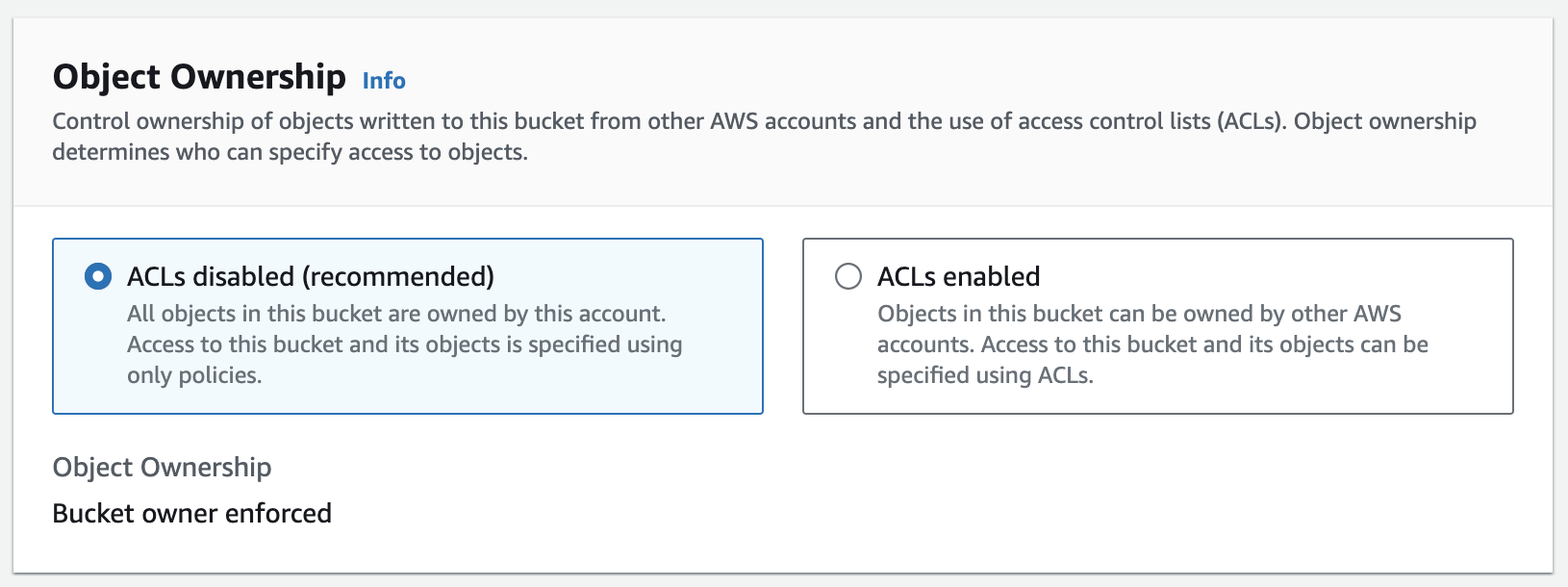

- Since we are going to replicate the objects between two buckets in the same account, we can choose "ACLs disabled" for object ownership. With this option, the objects in this bucket are owned by this account, and access to the bucket and its objects is specified using only policies.

- Public access can be blocked for now as we are going to access the objects within the AWS network only.

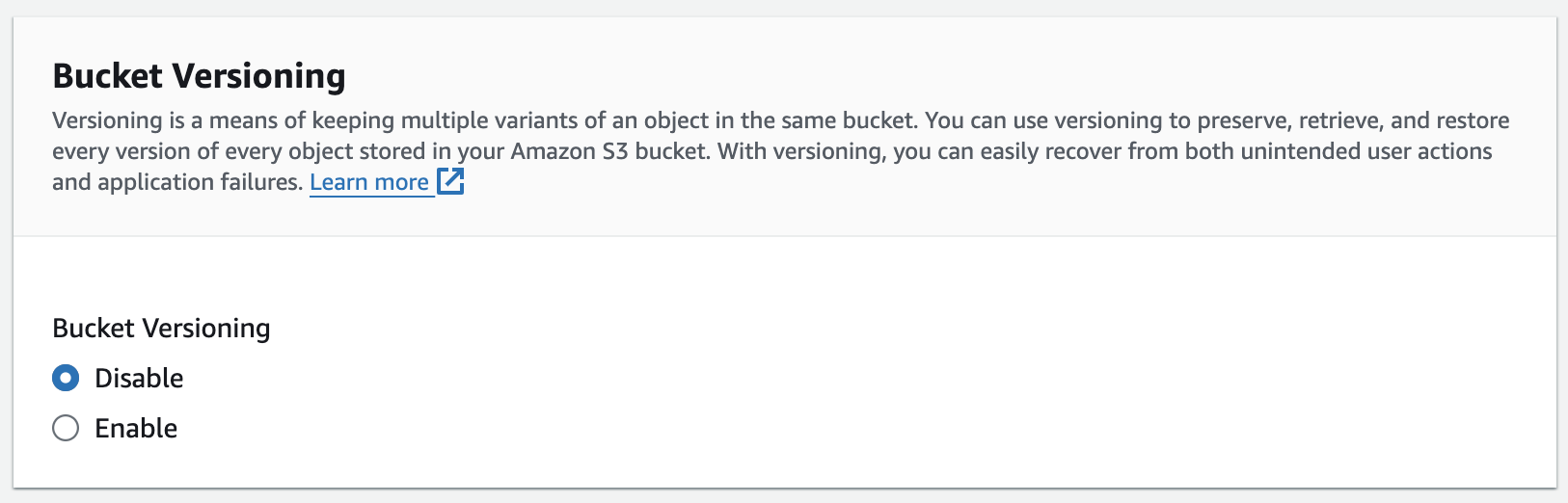

- Bucket versioning must be enabled to replicate the objects.

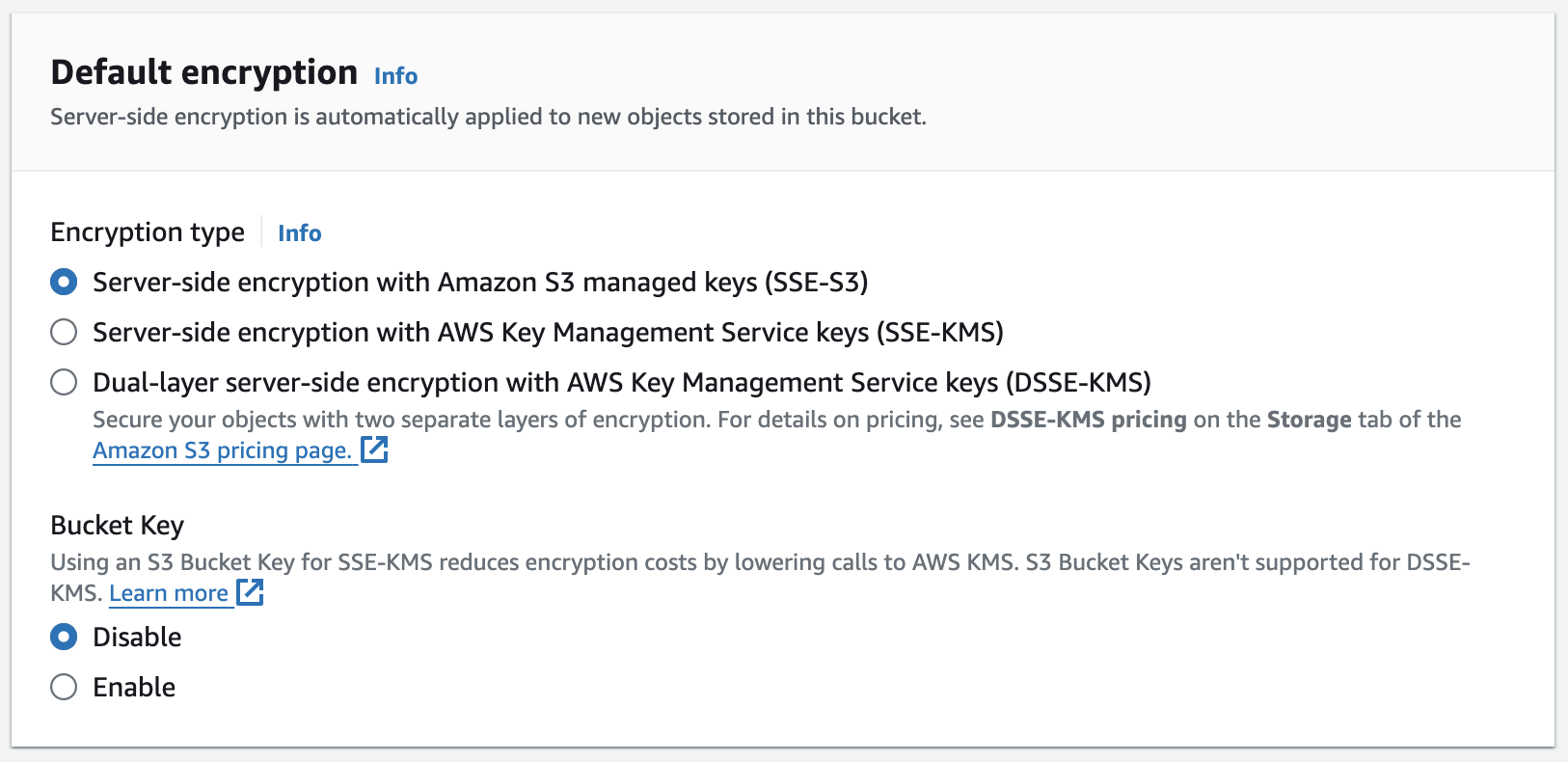

- Replication works for unencrypted objects, objects encrypted using customer-provided keys (SSE-C), objects encrypted at rest under an Amazon S3 managed key (SSE-S3), and objects encrypted with a KMS key stored in AWS Key Management Service (SSE-KMS).

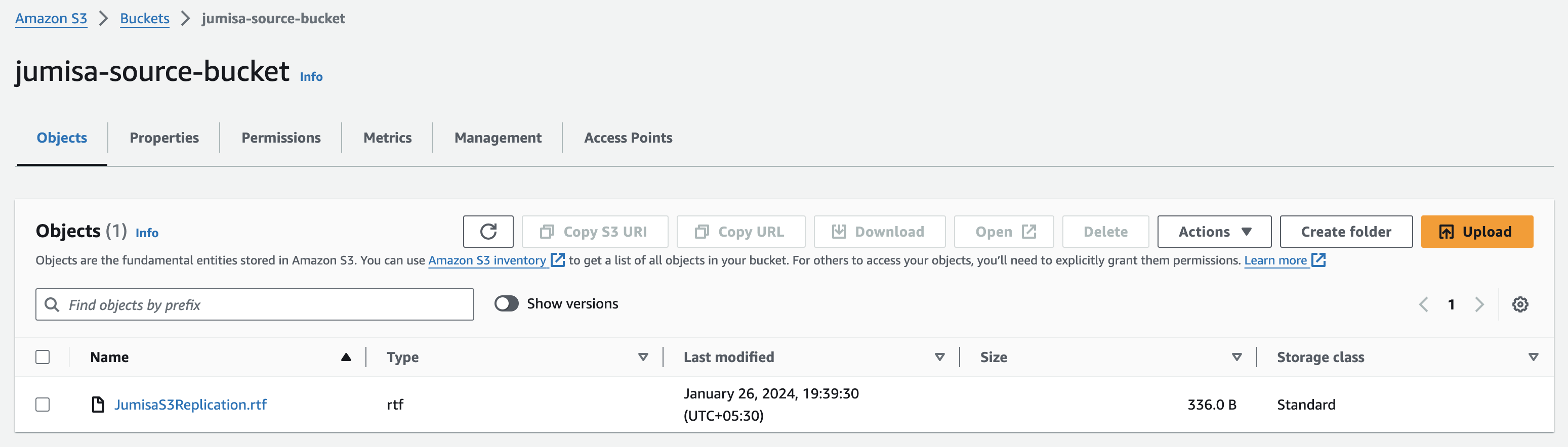

- Once the bucket is created, we can add any objects.

Destination Bucket

The destination bucket is created with the same configuration as the source bucket; only the name differs. Now we have our source and destination buckets ready.

Replication Rule

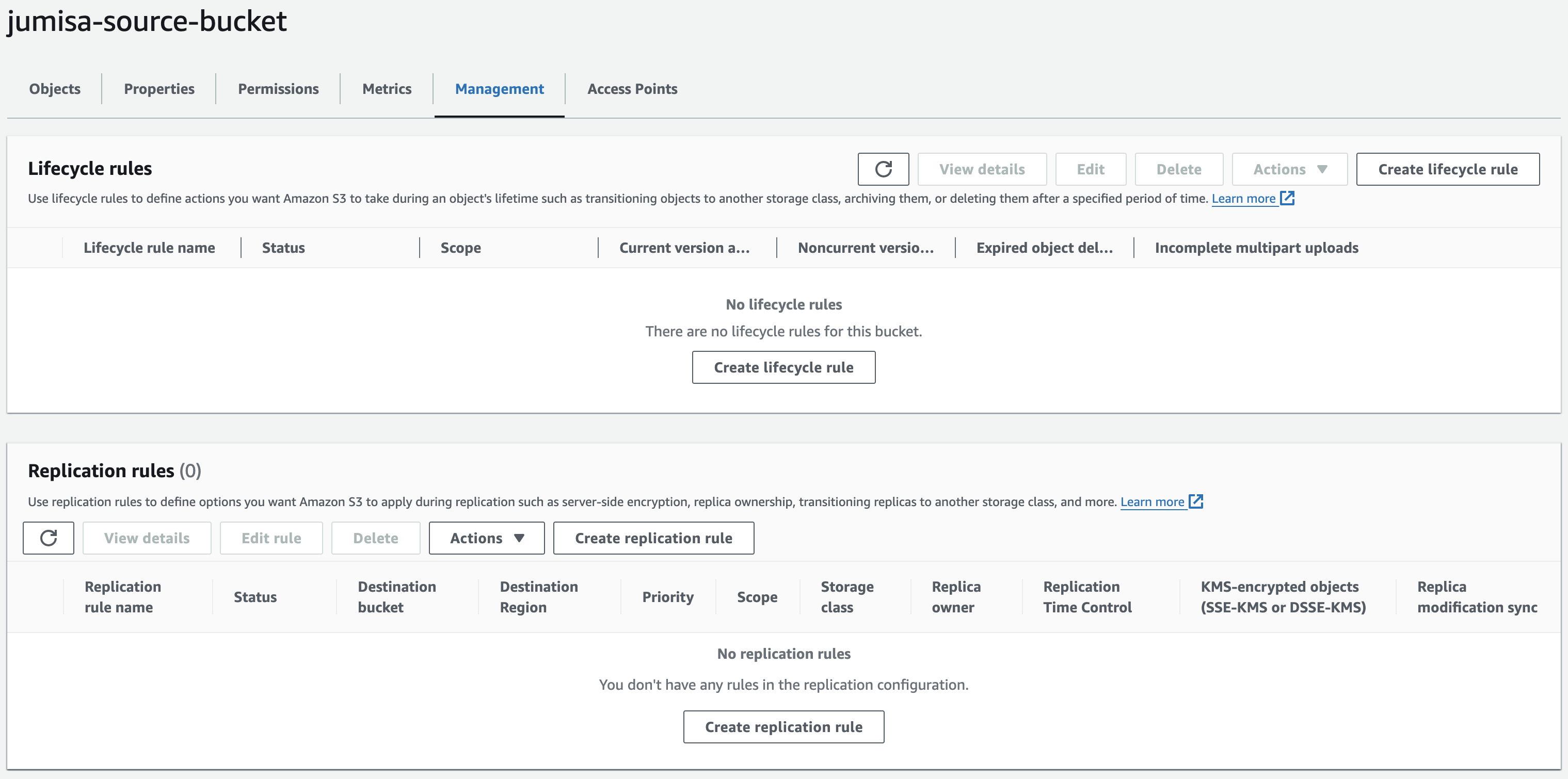

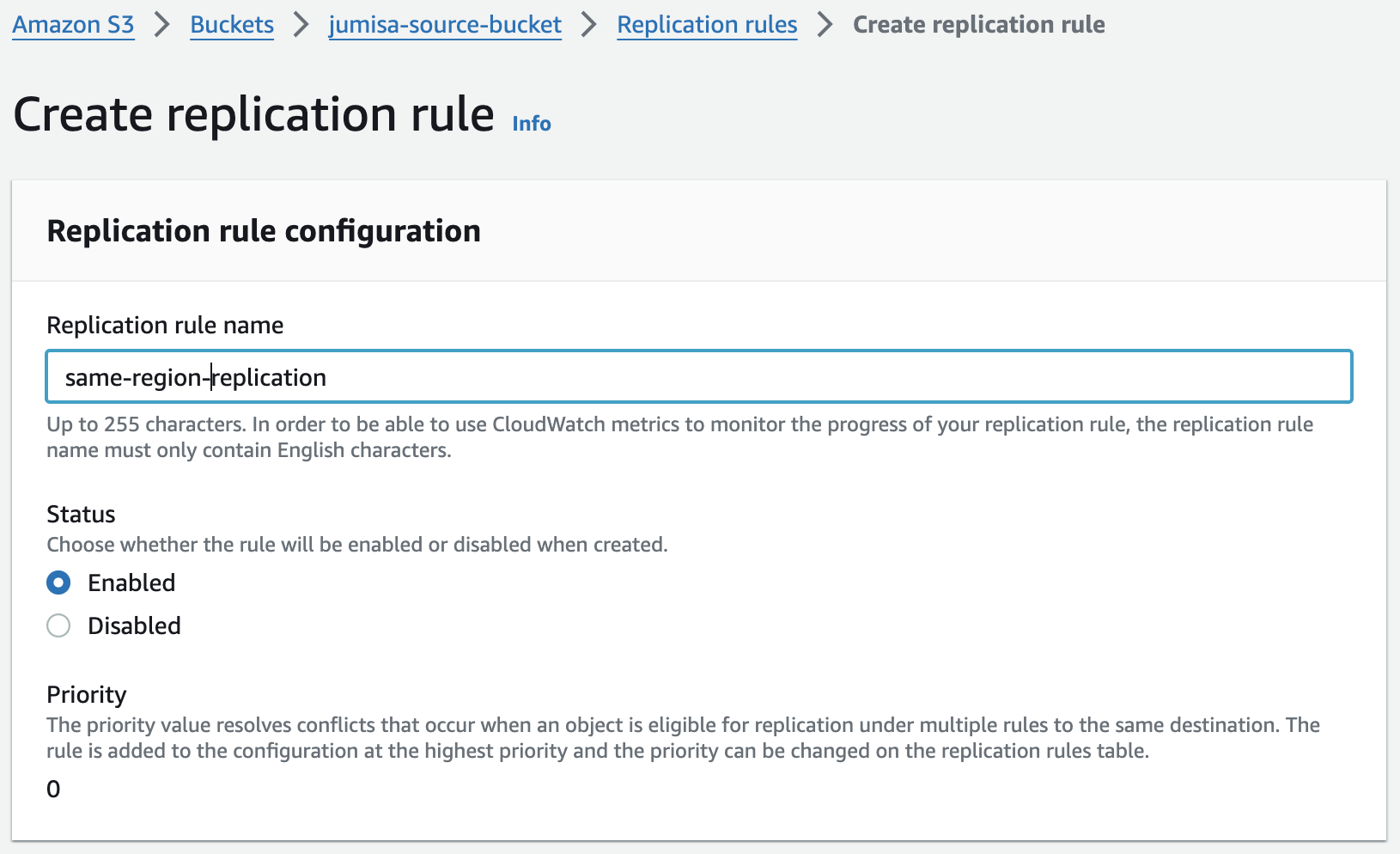

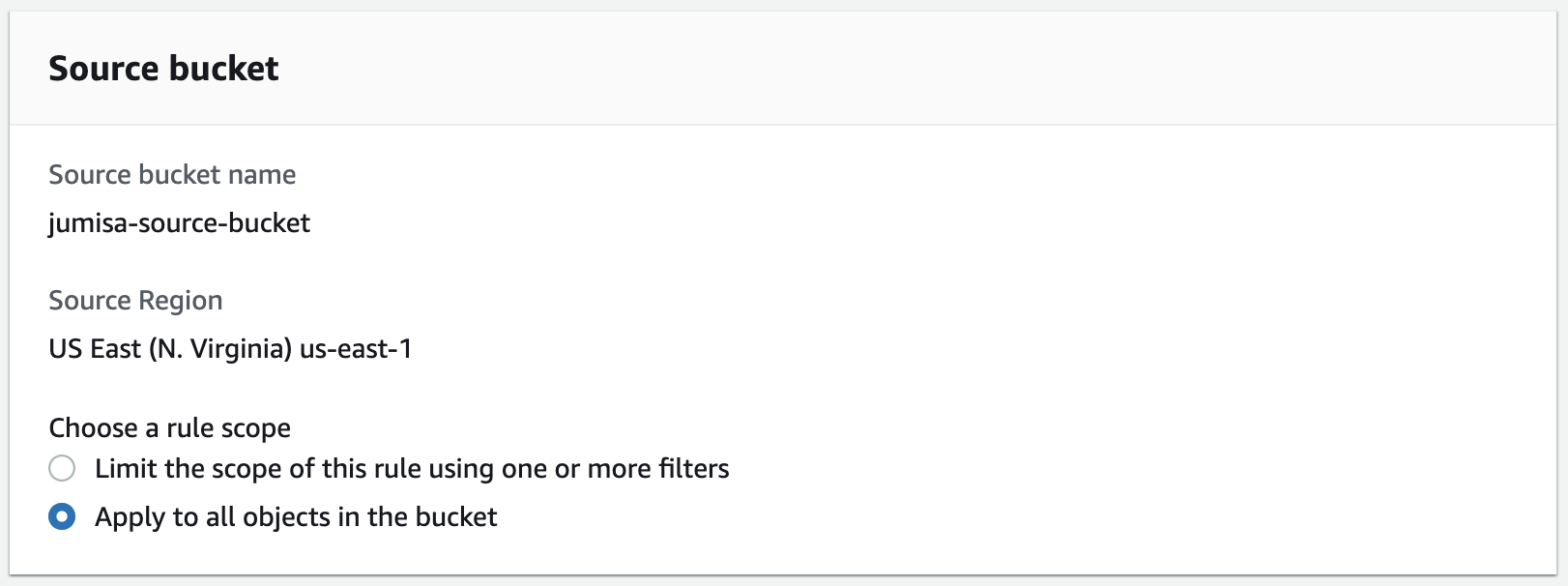

- The replication configuration must be created in the source bucket. In the management tab of the source bucket, we can create a replication rule.

- We can choose whether the rule will be enabled or disabled when created. In this example, we choose to enable it.

- The replication rule can be limited to selected objects by filtering on prefix, object tags, or a combination of both.
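When a rule is defined through the API rather than the console, the prefix and tag choices become the rule's `Filter` element. The sketch below shows one way to build it in Python; the helper name `build_rule_filter` and the sample prefix and tag are hypothetical, and the dictionary shapes follow the S3 replication configuration as I understand it (a single `Prefix`, a single `Tag`, or an `And` block combining them).

```python
def build_rule_filter(prefix=None, tags=None):
    """Return the Filter element of a replication rule.

    prefix: optional key prefix, e.g. "logs/"
    tags:   optional dict of object tags, e.g. {"team": "data"}
    """
    tag_list = [{"Key": k, "Value": v} for k, v in (tags or {}).items()]
    if not tag_list:
        # An empty prefix matches every object in the bucket.
        return {"Prefix": prefix or ""}
    if not prefix and len(tag_list) == 1:
        return {"Tag": tag_list[0]}
    # Several conditions must be wrapped in an "And" block.
    combined = {"Tags": tag_list}
    if prefix:
        combined["Prefix"] = prefix
    return {"And": combined}


print(build_rule_filter(prefix="logs/", tags={"team": "data"}))
```

Calling the helper with no arguments gives a filter that applies the rule to the whole bucket, which is what this walkthrough uses.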

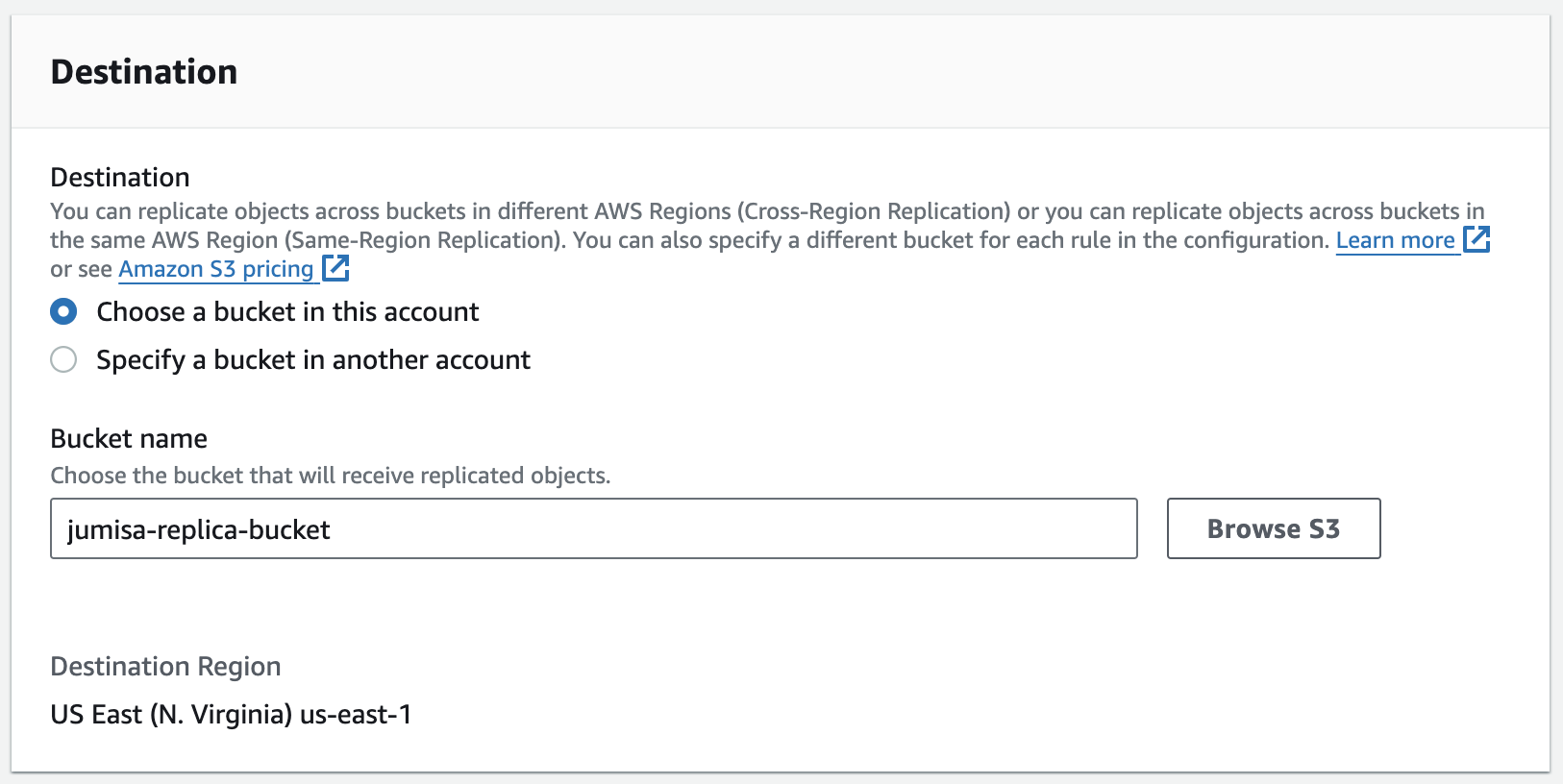

- The destination bucket can be in the same account or in a different account. In this case, we choose the destination bucket that we already created.

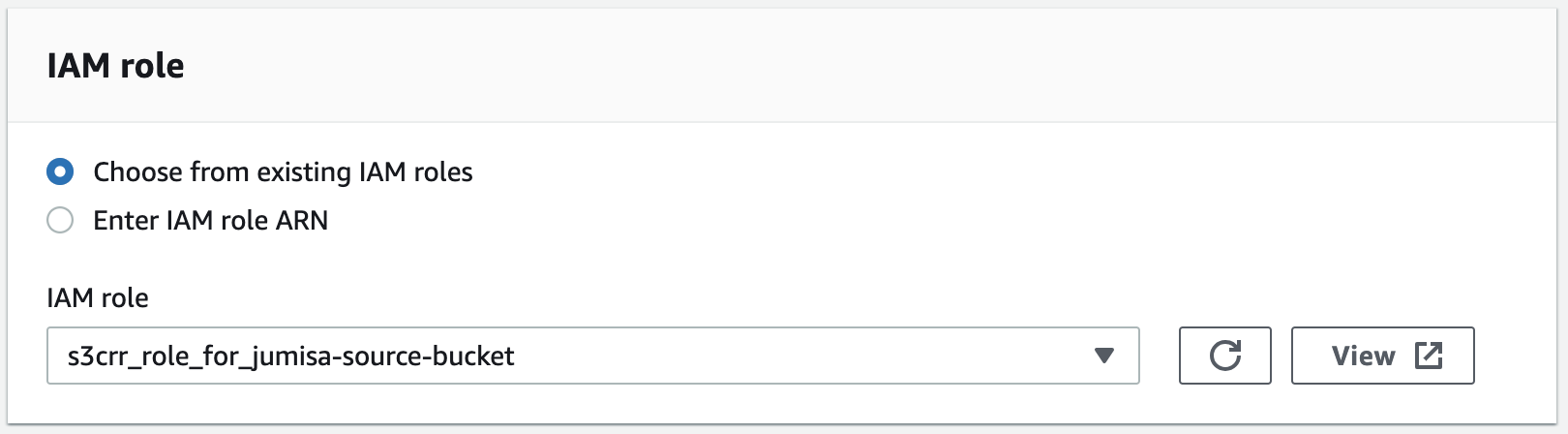

- An IAM role with permissions on both the source and destination buckets must be created and attached to the replication rule. We can also let the console create a new role. In either case, the policy attached to the IAM role should look like the following.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": [
            "s3:ListBucket",
            "s3:GetReplicationConfiguration",
            "s3:GetObjectVersionForReplication",
            "s3:GetObjectVersionAcl",
            "s3:GetObjectVersionTagging",
            "s3:GetObjectRetention",
            "s3:GetObjectLegalHold"
          ],
          "Effect": "Allow",
          "Resource": [
            "arn:aws:s3:::jumisa-source-bucket",
            "arn:aws:s3:::jumisa-source-bucket/*",
            "arn:aws:s3:::jumisa-replica-bucket",
            "arn:aws:s3:::jumisa-replica-bucket/*"
          ]
        },
        {
          "Action": [
            "s3:ReplicateObject",
            "s3:ReplicateDelete",
            "s3:ReplicateTags",
            "s3:ObjectOwnerOverrideToBucketOwner"
          ],
          "Effect": "Allow",
          "Resource": [
            "arn:aws:s3:::jumisa-source-bucket/*",
            "arn:aws:s3:::jumisa-replica-bucket/*"
          ]
        }
      ]
    }
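If the same policy is needed for other bucket pairs, it can be generated instead of edited by hand. The following is a small sketch in Python that reproduces the policy above from two bucket names; the function name `build_replication_policy` is hypothetical, and the list of actions is copied from the policy shown in this article rather than derived independently.

```python
import json


def build_replication_policy(source_bucket, destination_bucket):
    """Build the replication permissions policy for a pair of bucket names."""
    source_arn = f"arn:aws:s3:::{source_bucket}"
    destination_arn = f"arn:aws:s3:::{destination_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read access needed to discover and fetch object versions.
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetReplicationConfiguration",
                    "s3:GetObjectVersionForReplication",
                    "s3:GetObjectVersionAcl",
                    "s3:GetObjectVersionTagging",
                    "s3:GetObjectRetention",
                    "s3:GetObjectLegalHold",
                ],
                "Resource": [
                    source_arn,
                    f"{source_arn}/*",
                    destination_arn,
                    f"{destination_arn}/*",
                ],
            },
            {
                # Write access needed to create replicas.
                "Effect": "Allow",
                "Action": [
                    "s3:ReplicateObject",
                    "s3:ReplicateDelete",
                    "s3:ReplicateTags",
                    "s3:ObjectOwnerOverrideToBucketOwner",
                ],
                "Resource": [f"{source_arn}/*", f"{destination_arn}/*"],
            },
        ],
    }


policy = build_replication_policy("jumisa-source-bucket", "jumisa-replica-bucket")
print(json.dumps(policy, indent=2))
```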

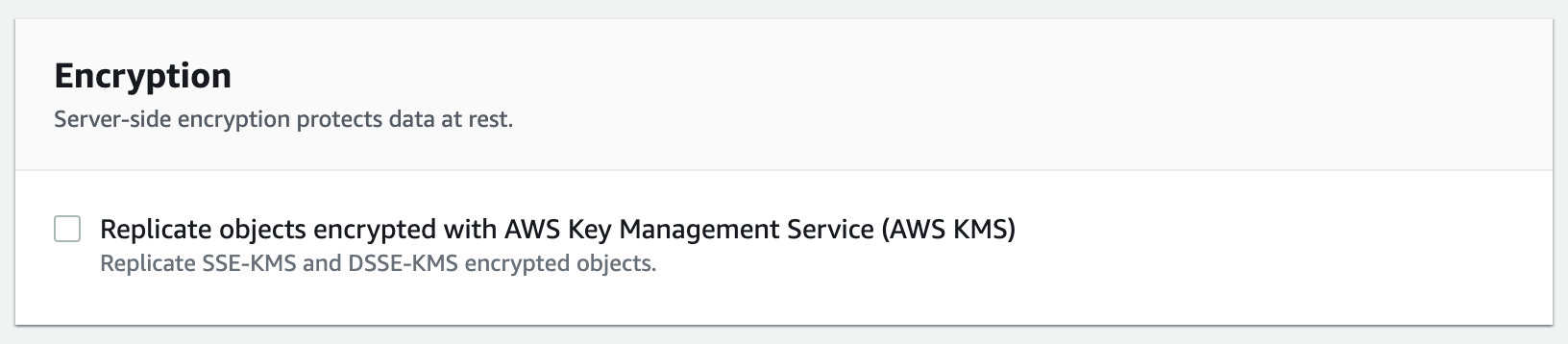

- If the objects in the source bucket are encrypted with a KMS key (SSE-KMS) and need to be replicated, we must select a KMS key in the replication rule for encrypting the replicas. Here we choose the same key used by the source bucket so that the replicated objects in the destination can be decrypted.

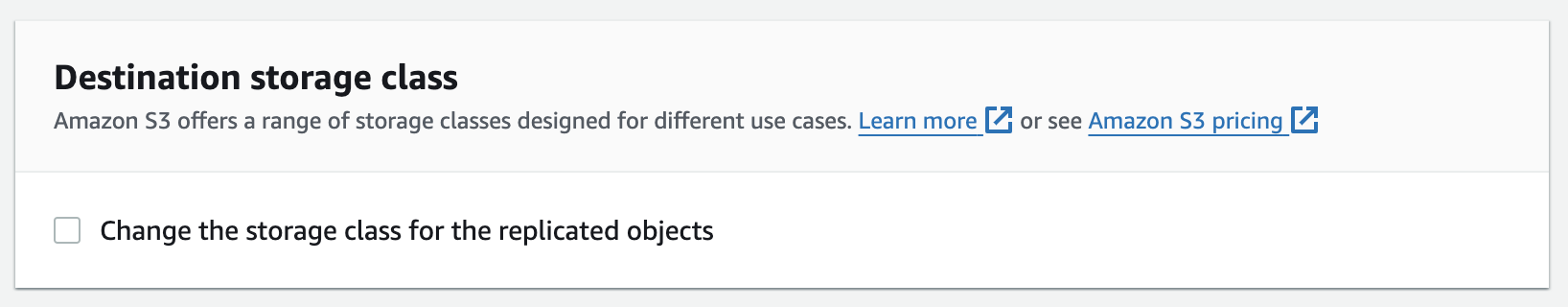

- By default, the storage class of the replicated objects in the destination bucket is the same as in the source bucket. This can be changed to any of the storage classes available in S3, such as Standard, Intelligent-Tiering, or Standard-IA.
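The console choices made so far (rule status, scope, destination, IAM role, and storage class) correspond to a single replication configuration document. The sketch below builds that document in Python in the shape expected by boto3's `put_bucket_replication`; the function name, the rule ID `replicate-all`, the account ID, and the role name are placeholders for illustration and do not come from this walkthrough.

```python
def build_replication_config(role_arn, destination_bucket,
                             storage_class=None, enabled=True):
    """Build a one-rule replication configuration for a whole bucket."""
    destination = {"Bucket": f"arn:aws:s3:::{destination_bucket}"}
    if storage_class:
        # For example "STANDARD_IA" or "INTELLIGENT_TIERING".
        # When omitted, replicas keep the source object's storage class.
        destination["StorageClass"] = storage_class
    rule = {
        "ID": "replicate-all",           # hypothetical rule name
        "Priority": 1,
        "Status": "Enabled" if enabled else "Disabled",
        "Filter": {"Prefix": ""},        # empty prefix: every object
        "DeleteMarkerReplication": {"Status": "Disabled"},
        "Destination": destination,
    }
    return {"Role": role_arn, "Rules": [rule]}


config = build_replication_config(
    role_arn="arn:aws:iam::123456789012:role/example-replication-role",
    destination_bucket="jumisa-replica-bucket",
    storage_class="STANDARD_IA",
)

# Applying it requires boto3 and AWS credentials:
# import boto3
# boto3.client("s3").put_bucket_replication(
#     Bucket="jumisa-source-bucket",
#     ReplicationConfiguration=config,
# )
```

The configuration is always attached to the source bucket, which matches where the rule is created in the console.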

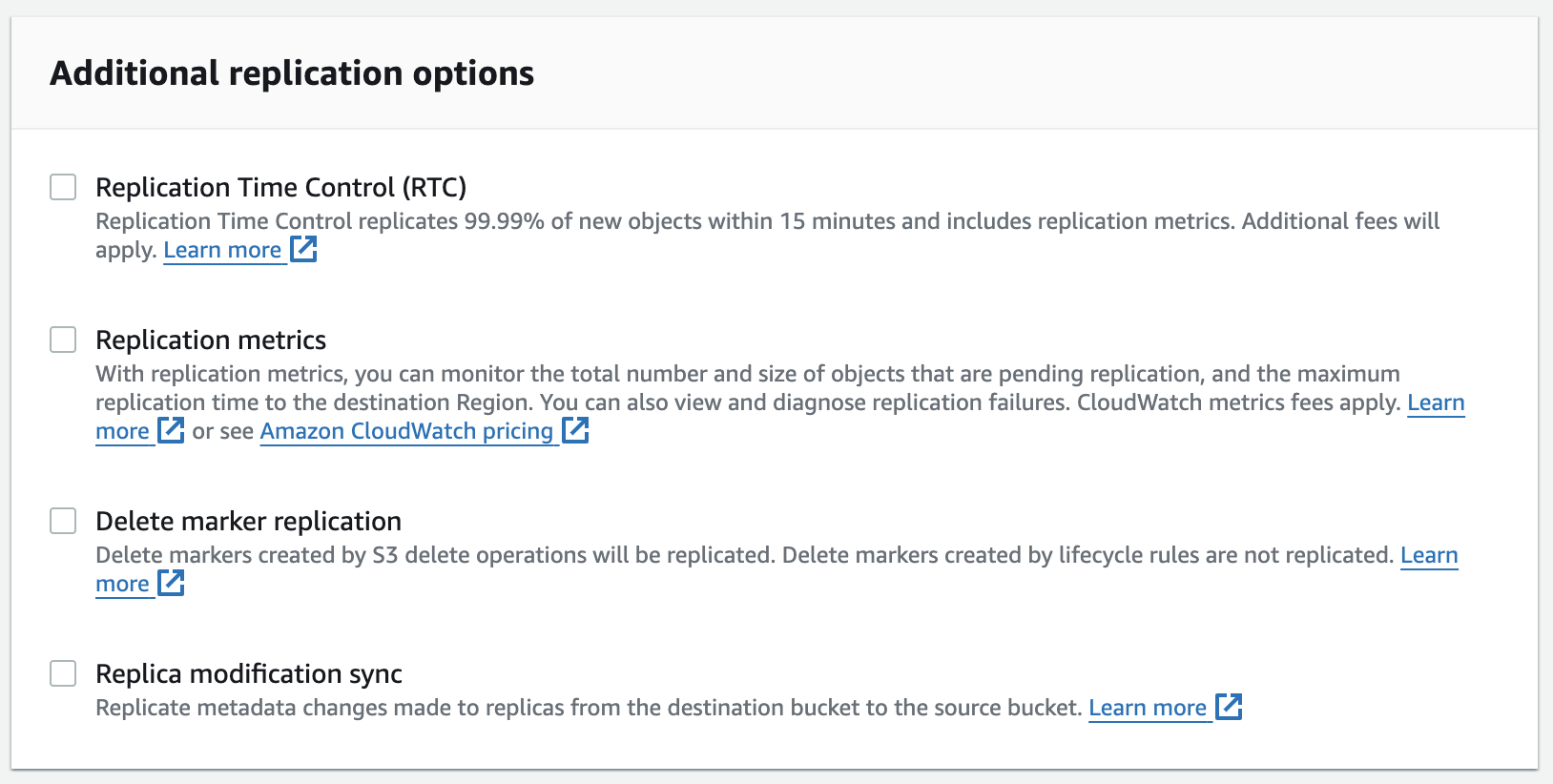

In addition to the above configurations, there are a few more optional configurations that can be used to alter the properties of the replicated objects.

Replication Time Control replicates 99.99% of new objects within 15 minutes and includes replication metrics.

With Replication Metrics, you can monitor the total number and size of objects that are pending replication and the maximum replication time to the destination Region. You can also view and diagnose replication failures.

When delete marker replication is enabled, delete markers created by S3 delete operations are replicated. Delete markers created by lifecycle rules are not replicated.

The Replica Modification Sync option is used to replicate metadata changes made to replicas from the destination bucket to the source bucket.

In our case, we are currently not using any of these options and leaving the default configuration untouched.
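For readers who do want these options, they map to a few extra fields on each replication rule. The following is a hedged sketch, assuming a replication configuration dictionary in the shape accepted by boto3's `put_bucket_replication`; the function name `with_optional_features` and its parameters are hypothetical.

```python
import copy


def with_optional_features(config, rtc=False, delete_markers=False,
                           replica_modification_sync=False):
    """Return a copy of a replication configuration with options switched on."""
    updated = copy.deepcopy(config)  # leave the caller's dictionary untouched
    for rule in updated["Rules"]:
        if rtc:
            # Replication Time Control is configured together with metrics;
            # 15 minutes matches the threshold described above.
            rule["Destination"]["ReplicationTime"] = {
                "Status": "Enabled",
                "Time": {"Minutes": 15},
            }
            rule["Destination"]["Metrics"] = {
                "Status": "Enabled",
                "EventThreshold": {"Minutes": 15},
            }
        if delete_markers:
            rule["DeleteMarkerReplication"] = {"Status": "Enabled"}
        if replica_modification_sync:
            criteria = rule.setdefault("SourceSelectionCriteria", {})
            criteria["ReplicaModifications"] = {"Status": "Enabled"}
    return updated


base = {
    "Role": "arn:aws:iam::123456789012:role/example-replication-role",
    "Rules": [{
        "ID": "replicate-all",
        "Priority": 1,
        "Status": "Enabled",
        "Filter": {"Prefix": ""},
        "DeleteMarkerReplication": {"Status": "Disabled"},
        "Destination": {"Bucket": "arn:aws:s3:::jumisa-replica-bucket"},
    }],
}
tuned = with_optional_features(base, rtc=True, delete_markers=True)
```

Working on a copy keeps the default configuration available for comparison, which is convenient when only some buckets need the stricter replication time guarantee.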

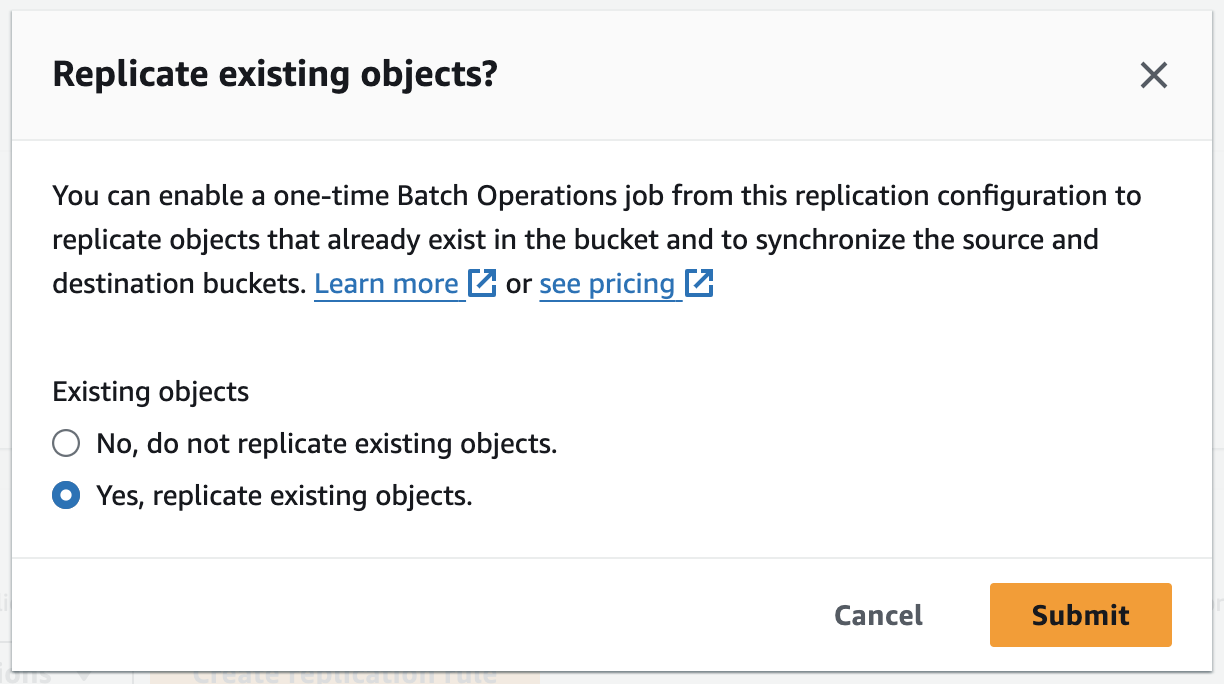

If there are any objects available in the S3 bucket before creating the replication rule, we can choose to run a one-time Batch Operations job from this replication configuration to replicate those objects to synchronize the source and destination buckets.

If we choose to replicate the existing objects, we will be redirected to the Create Batch Operations job widget.

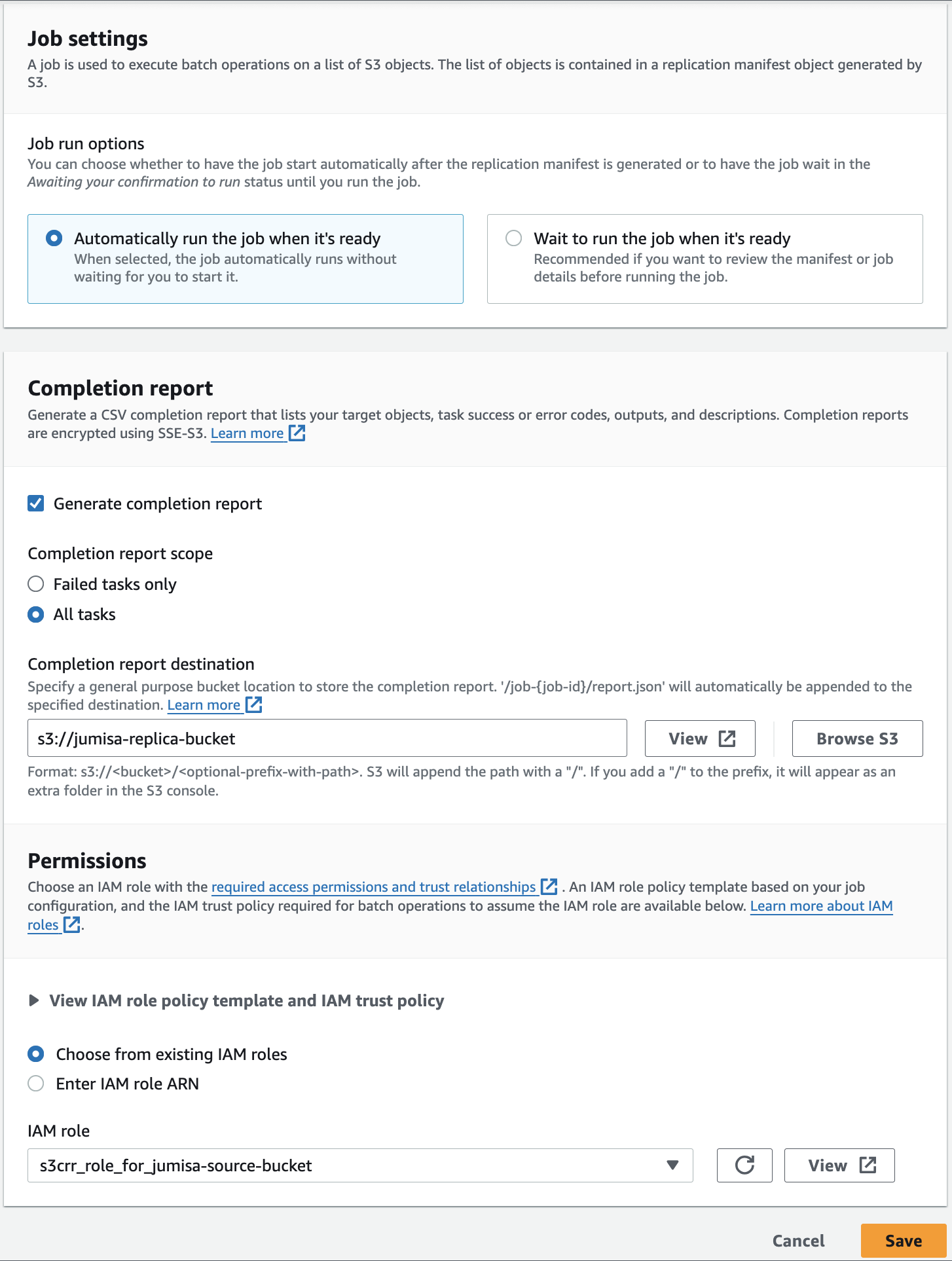

The batch job can be configured to run automatically as soon as it is created, or we can trigger it manually.

We can also generate a completion report and store it in a bucket to check whether the replication worked. If the replication is failing, this report contains a detailed log that helps us identify the error and fix it.

We can store this report in either of our existing buckets (source or destination) or a new bucket can be created to store the report. Since this job will be run only once just to verify the replication configuration, we need not create a separate bucket for the completion report. If the report is stored in the source bucket, then it will also be replicated to the destination bucket. To avoid this, we can choose the destination bucket as the path to store the completion report.

To grant permissions for this job, we use the same IAM policy, s3replicate_for_jumisa-source-bucket_c3e5eb. The JSON of the policy is as follows:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "s3:InitiateReplication",
            "s3:GetReplicationConfiguration",
            "s3:PutInventoryConfiguration"
          ],
          "Resource": [
            "arn:aws:s3:::jumisa-source-bucket",
            "arn:aws:s3:::jumisa-source-bucket/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::jumisa-replica-bucket/*"
        }
      ]
    }
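Permissions like these only take effect if the service is allowed to assume the role they are attached to. When the role is created by hand rather than by the console, its trust policy therefore needs to name the S3 service principals. The sketch below builds such a trust policy in Python; the function name is hypothetical, and sharing one role between replication and Batch Operations is an assumption based on this walkthrough reusing the same role for both.

```python
import json


def build_trust_policy(include_batch_operations=True):
    """Build a trust policy letting S3 (and optionally Batch Operations) assume a role."""
    services = ["s3.amazonaws.com"]  # used by live replication
    if include_batch_operations:
        # Used by S3 Batch Operations jobs such as Batch Replication.
        services.append("batchoperations.s3.amazonaws.com")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": services},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(build_trust_policy(), indent=2))
```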

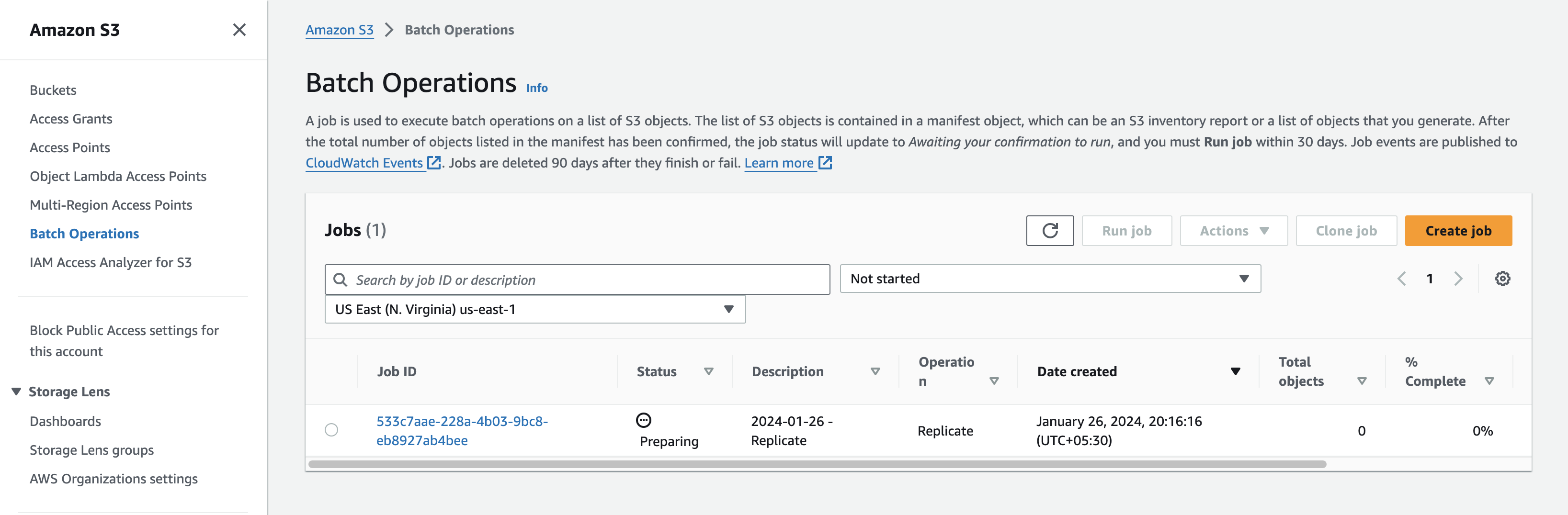

The status of the Batch operation can be monitored as shown below.

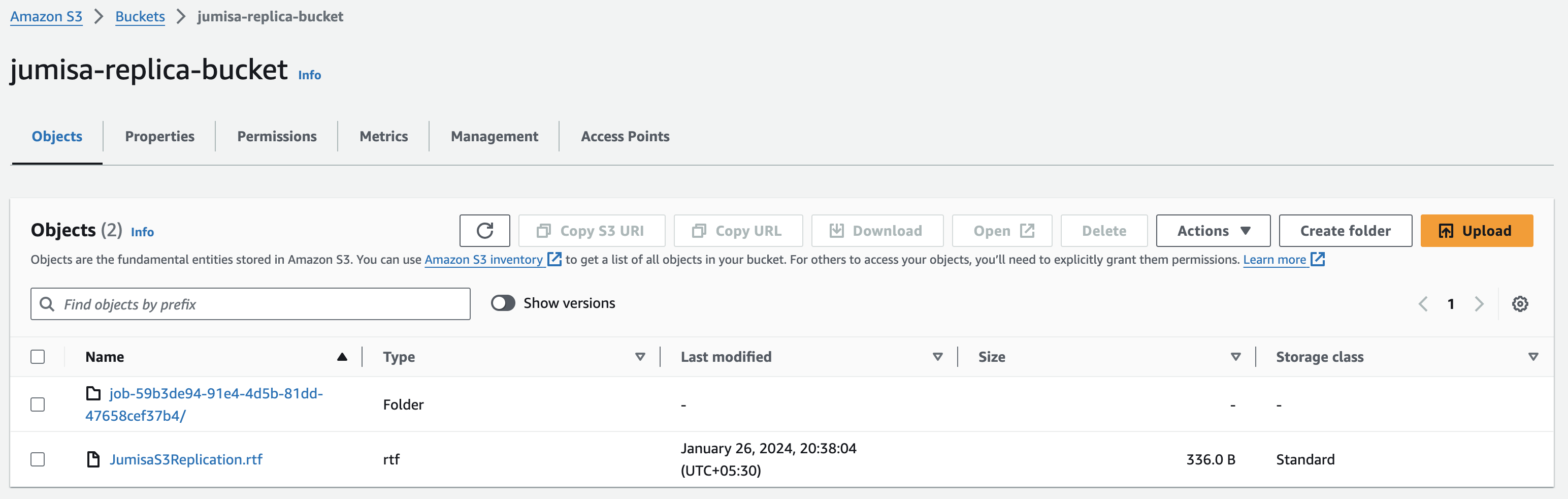

Once the job is completed, we can see the objects from the source bucket replicated into the destination bucket.

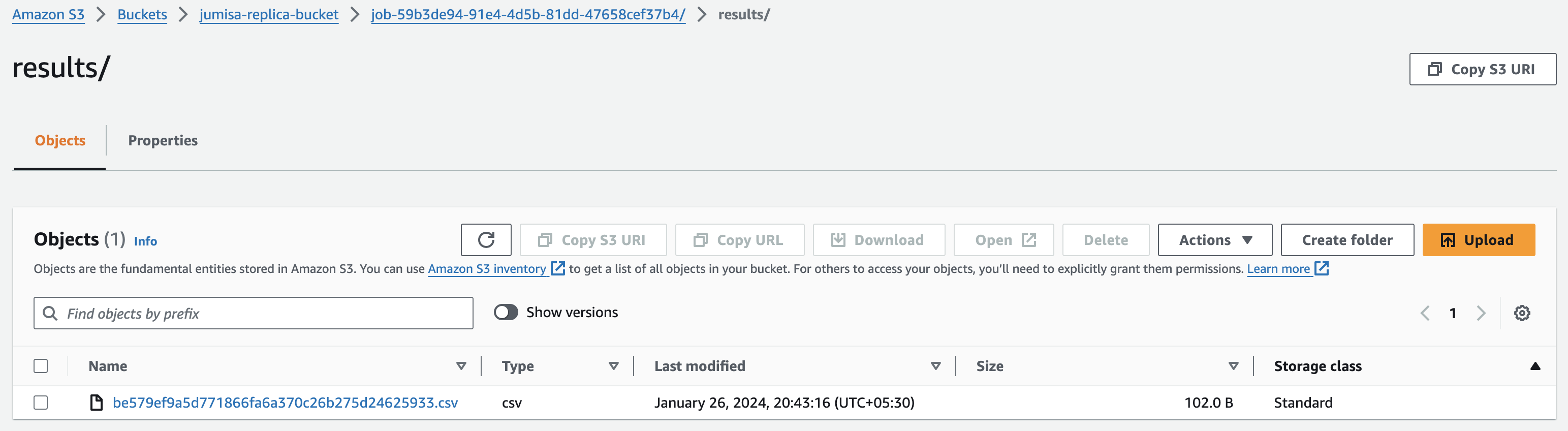
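Besides looking in the console, the replication state of an individual object can be read from its metadata. The following is a minimal sketch, assuming a boto3 S3 client is passed in; the helper name `replication_status` and the example key are hypothetical. S3 reports values such as PENDING, COMPLETED, or FAILED on source objects and REPLICA on replicated copies.

```python
def replication_status(s3_client, bucket_name, key):
    """Return the replication status of an object, or None if it has none.

    `s3_client` is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    response = s3_client.head_object(Bucket=bucket_name, Key=key)
    # The field is absent for objects that no replication rule applies to.
    return response.get("ReplicationStatus")


# Example usage (requires boto3 and AWS credentials; the key is made up):
# import boto3
# s3 = boto3.client("s3")
# print(replication_status(s3, "jumisa-source-bucket", "example.txt"))
# print(replication_status(s3, "jumisa-replica-bucket", "example.txt"))
```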

Along with the replicated object, we can also see the completion report of the batch operation job in the destination bucket.

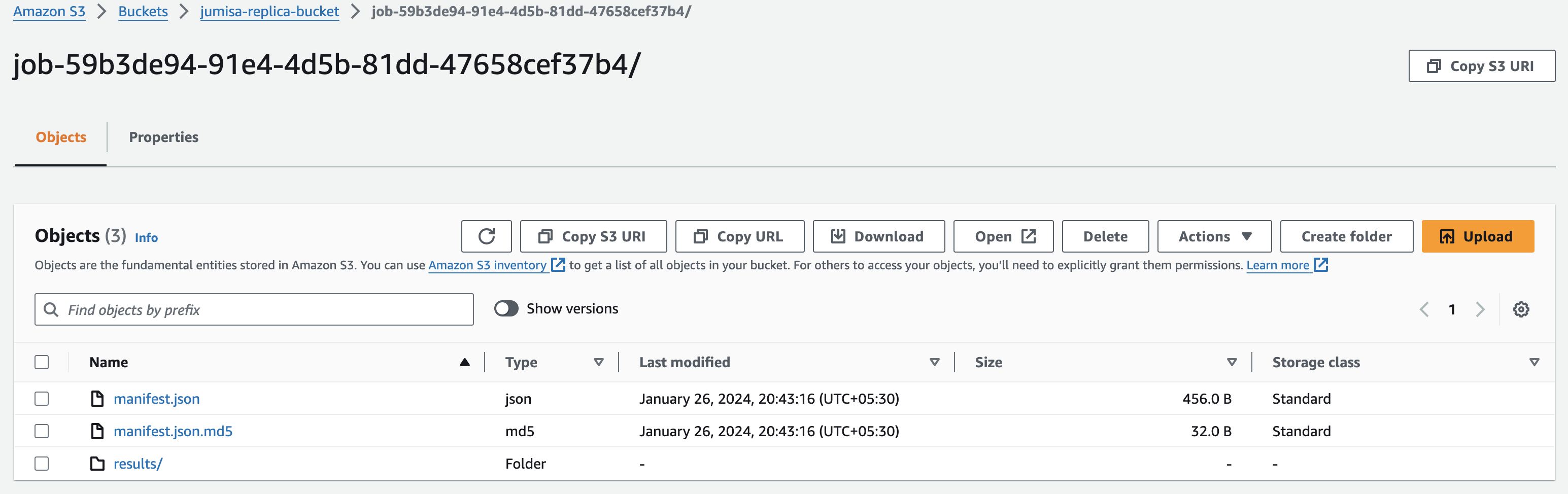

This report includes the manifest.json file and the actual report in a CSV file, as shown below.

The manifest.json file contains the details of the job report such as the report format, report creation time, job status, bucket name, and path where the report file is stored in the bucket.

    {
      "Format": "Report_CSV_20180820",
      "ReportCreationDate": "2024-01-26T15:13:15.420725987Z",
      "Results": [
        {
          "TaskExecutionStatus": "succeeded",
          "Bucket": "jumisa-replica-bucket",
          "MD5Checksum": "bb709a6d3f01e943d88adce9c88192da",
          "Key": "job-59b3de94-91e4-4d5b-81dd-47658cef37b4/results/be579ef9a5d771866fa6a370c26b275d24625933.csv"
        }
      ],
      "ReportSchema": "Bucket, Key, VersionId, TaskStatus, ErrorCode, HTTPStatusCode, ResultMessage"
    }
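Because the manifest lists the column names in `ReportSchema`, the CSV report can be turned into labelled records with a few lines of code. The sketch below uses only the Python standard library; the function names are hypothetical, and the two CSV rows are invented for illustration (they are not taken from the report in this walkthrough). It assumes the CSV has no header row and that failed tasks carry a `TaskStatus` other than `succeeded`.

```python
import csv
import io
import json


def parse_report(manifest_text, csv_text):
    """Label each CSV row with the column names from the manifest's ReportSchema."""
    manifest = json.loads(manifest_text)
    columns = [name.strip() for name in manifest["ReportSchema"].split(",")]
    reader = csv.reader(io.StringIO(csv_text))
    return [dict(zip(columns, row)) for row in reader if row]


def failed_tasks(rows):
    """Keep only the rows whose task did not succeed."""
    return [row for row in rows if row["TaskStatus"] != "succeeded"]


# Trimmed manifest plus made-up report rows, for illustration only.
sample_manifest = json.dumps({
    "ReportSchema": "Bucket, Key, VersionId, TaskStatus, ErrorCode, "
                    "HTTPStatusCode, ResultMessage"
})
sample_csv = (
    "jumisa-source-bucket,a.txt,v1,succeeded,,200,Successful\n"
    "jumisa-source-bucket,b.txt,v2,failed,AccessDenied,403,Access Denied\n"
)

rows = parse_report(sample_manifest, sample_csv)
for row in failed_tasks(rows):
    print(row["Key"], row["ErrorCode"], row["ResultMessage"])
```

In practice the manifest and CSV would first be downloaded from the report location in the destination bucket, using the `Bucket` and `Key` values listed under `Results`.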

We have discussed how to set up replication between two S3 buckets in the same account and the same Region.

Replication is not limited to this setup.

Many more types of replication are possible, as listed earlier in the article.

We highly appreciate your patience and the time spent reading this article.

Stay tuned for more content.

Happy reading! Let us learn together!