Use case:

A business needs to share private resources or services from one AWS network with another, under the following constraints:

- All communication must stay private, that is, within the AWS network and never over the public internet.

- Only the specific resource or service is shared, not the entire network.

For example, the business wants to share one of its data sources with a data lake cluster hosted in the same region but in a different AWS account, for data analytics purposes.

Consider a concrete case: a data lake (Kylo or Dremio) is hosted in N. Virginia in one AWS account, and a MySQL data source is hosted in the same region but in a private network of a different account. The business wants to share the MySQL data source with the data lake without allowing access to any other resources or services.

Proposed solution:

VPC Peering and Transit Gateway cannot meet this requirement because both VPCs use the same CIDR range, and traffic cannot be routed between overlapping CIDRs. The connection can instead be made with AWS PrivateLink.
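The CIDR conflict can be checked up front. The following is a minimal sketch using Python's standard `ipaddress` module; the CIDR values are hypothetical, since the actual range used in this setup appears only in the screenshots.

```python
import ipaddress


def cidrs_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True when the two CIDR blocks share at least one address."""
    net_a = ipaddress.ip_network(cidr_a)
    net_b = ipaddress.ip_network(cidr_b)
    return net_a.overlaps(net_b)


# Hypothetical values: both VPCs are assumed to use the same range.
database_vpc_cidr = "10.0.0.0/16"
datalake_vpc_cidr = "10.0.0.0/16"

if cidrs_overlap(database_vpc_cidr, datalake_vpc_cidr):
    print("CIDRs overlap: routing-based options such as peering are ruled out")
else:
    print("CIDRs are disjoint: peering would be technically possible")
```

When the check reports an overlap, an endpoint-based approach such as PrivateLink is the natural fit, because the consumer only ever talks to an address inside its own VPC.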

In the solution discussed below, I have used multiple VPCs in the same account. The change required to establish a PrivateLink connection across multiple accounts is also mentioned.

Below are the steps to set up AWS PrivateLink between two VPCs.

- Create a database VPC and a data lake VPC with the same IP CIDRs.

- Create a MySQL RDS instance in the private subnet of the database VPC.

- Create a network load balancer (NLB) in the private subnet of the database VPC.

- Add a target group to the NLB that points to the private IP of the RDS.

- In the same database VPC, create a VPC Endpoint Service and attach the NLB to it.

- Create an EC2 instance in the data lake VPC.

- Create a VPC Endpoint in the data lake VPC and connect it to the VPC Endpoint Service of the database VPC.

STEP 1: Create the VPCs:

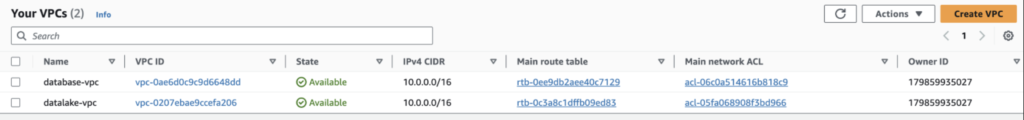

The database-vpc and the datalake-vpc have the same IPv4 CIDR as shown in the image below.

STEP 2: Create the RDS:

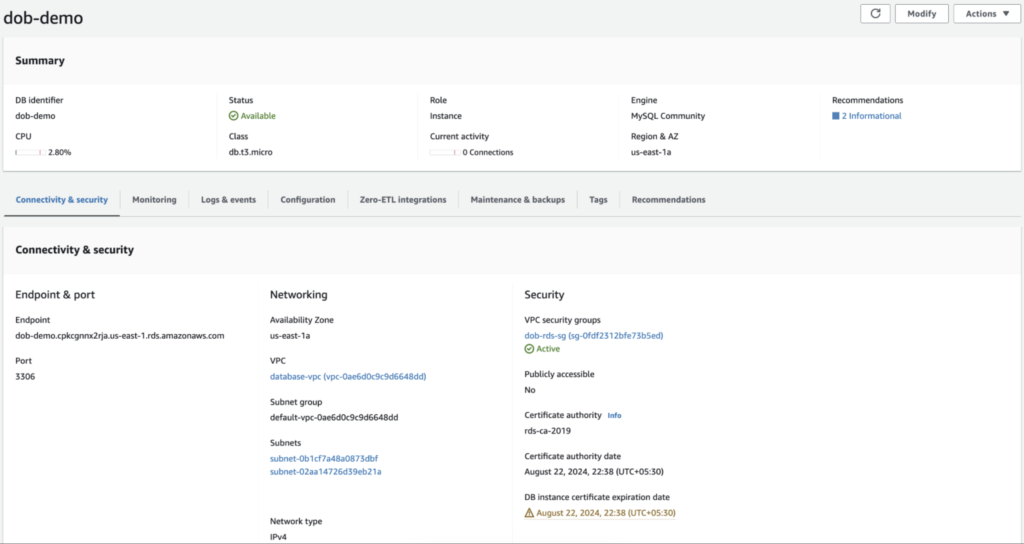

The MySQL RDS dob-demo is created in the database-vpc with a private subnet group as shown in the image below.

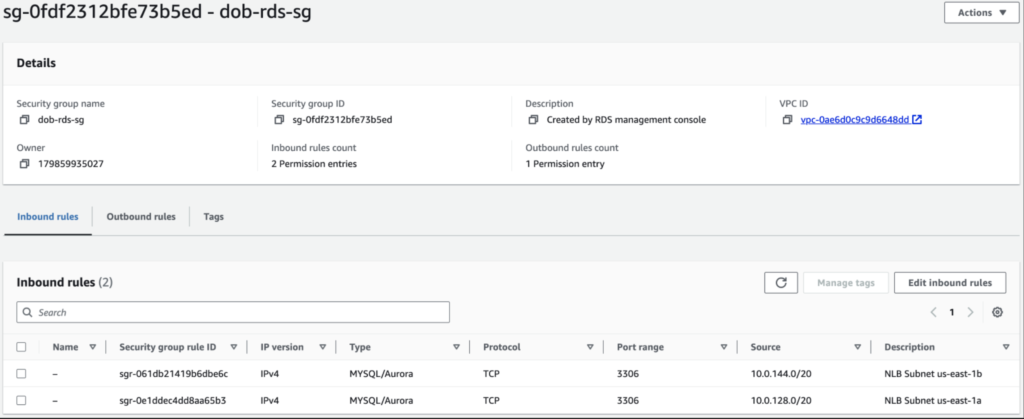

The security group for the RDS must have an inbound rule that allows MySQL port 3306 from the NLB subnets, as shown in the image below, along with the default outbound rule.

STEP 3: Create the NLB:

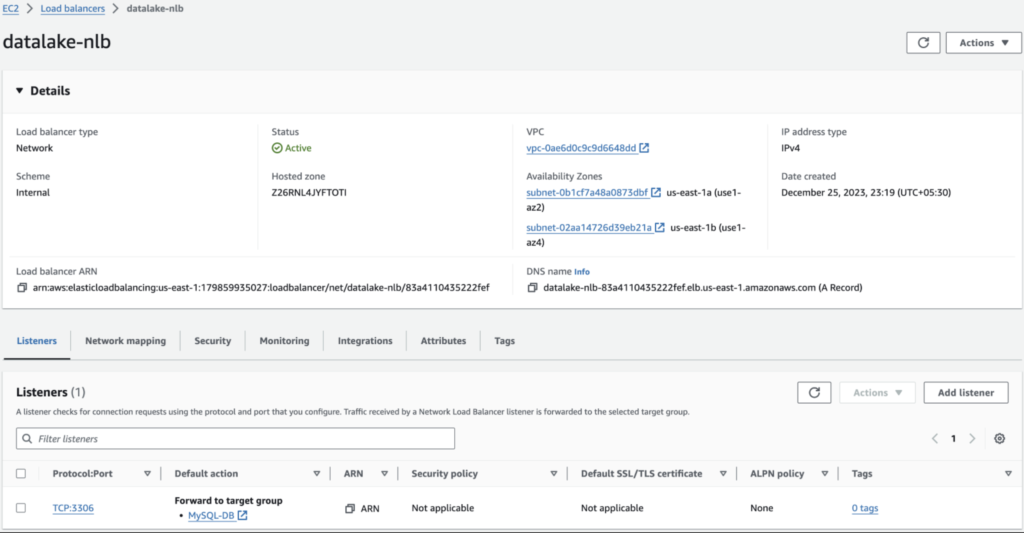

Create a network load balancer datalake-nlb with the listener rule to route all traffic that comes through port 3306 to the target group MySQL-DB as shown in the image below.

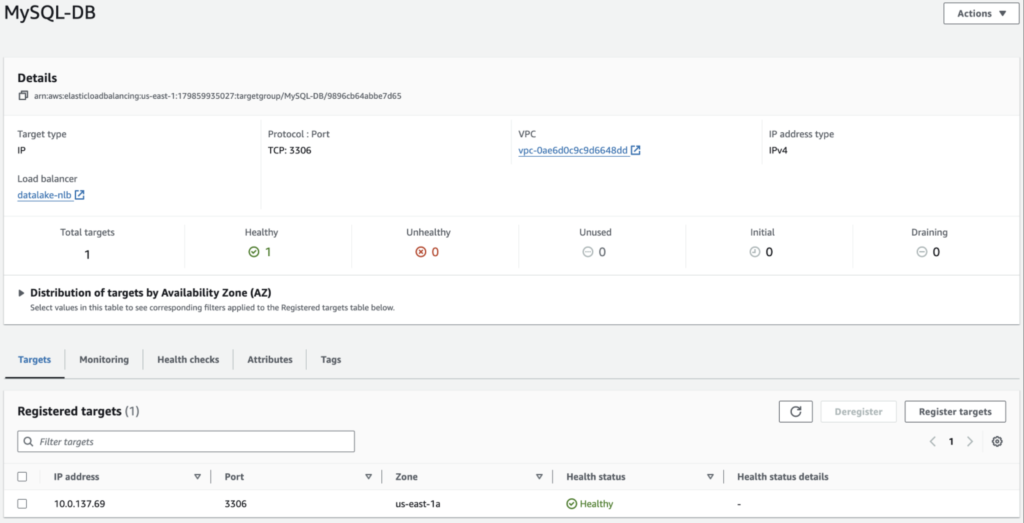

STEP 4: Add the RDS IP to the target group:

AWS exposes only the DNS name of the RDS instance, not its IP address. However, the target group here accepts only IP addresses, not DNS names.

The IP address of the RDS instance can be obtained by running a telnet command against the RDS endpoint from a jump host, which prints the address it resolves. To do this, create an EC2 instance in a public subnet of the database-vpc and use it temporarily to obtain the IP of the RDS.
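As an alternative to reading the address from telnet output, the lookup can be scripted on the jump host. This is a minimal sketch using only Python's standard library; the hostname is passed on the command line, and the script name is hypothetical.

```python
import socket
import sys


def resolve_ipv4(hostname: str) -> list[str]:
    """Return the sorted, de-duplicated IPv4 addresses a hostname resolves to."""
    infos = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example (hypothetical file name): python resolve_rds.py <rds-endpoint>
    for ip in resolve_ipv4(sys.argv[1]):
        print(ip)
```

Run from inside the database-vpc, this should print the private IP that is then registered in the target group.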

NOTE:

This workaround is acceptable here because the RDS instance is not expected to be rebooted. The IP address behind an RDS endpoint can change (for example, after a reboot or failover), while the DNS name stays the same. If the IP address may change, use a Lambda function that is triggered whenever the RDS instance restarts to obtain the new IP and update it in the target group.
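A Lambda function of the kind described in this note could look roughly like the sketch below. It is not the implementation used in this setup: it assumes `boto3` is available (as it is in the Lambda Python runtime), that the function can resolve the RDS endpoint and reach the Elastic Load Balancing API, and that the environment variable names `TARGET_GROUP_ARN`, `RDS_ENDPOINT`, and `DB_PORT` are hypothetical. How the function is triggered (for example, through an RDS event notification) is left out.

```python
import os
import socket


def plan_target_update(registered_ips, resolved_ips):
    """Return (ips_to_register, ips_to_deregister) as sorted lists."""
    registered, resolved = set(registered_ips), set(resolved_ips)
    return sorted(resolved - registered), sorted(registered - resolved)


def lambda_handler(event, context):
    import boto3  # assumption: provided by the Lambda runtime

    # Hypothetical environment variable names.
    target_group_arn = os.environ["TARGET_GROUP_ARN"]
    rds_endpoint = os.environ["RDS_ENDPOINT"]
    port = int(os.environ.get("DB_PORT", "3306"))

    elbv2 = boto3.client("elbv2")

    # IPs currently registered in the target group.
    health = elbv2.describe_target_health(TargetGroupArn=target_group_arn)
    registered = [d["Target"]["Id"] for d in health["TargetHealthDescriptions"]]

    # IPs the RDS DNS name resolves to right now.
    infos = socket.getaddrinfo(
        rds_endpoint, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    resolved = {info[4][0] for info in infos}

    to_add, to_remove = plan_target_update(registered, resolved)
    if to_add:
        elbv2.register_targets(
            TargetGroupArn=target_group_arn,
            Targets=[{"Id": ip, "Port": port} for ip in to_add],
        )
    if to_remove:
        elbv2.deregister_targets(
            TargetGroupArn=target_group_arn,
            Targets=[{"Id": ip, "Port": port} for ip in to_remove],
        )
    return {"registered": to_add, "deregistered": to_remove}
```

Keeping the comparison in `plan_target_update` separate from the AWS calls makes the logic easy to check locally without any AWS access.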

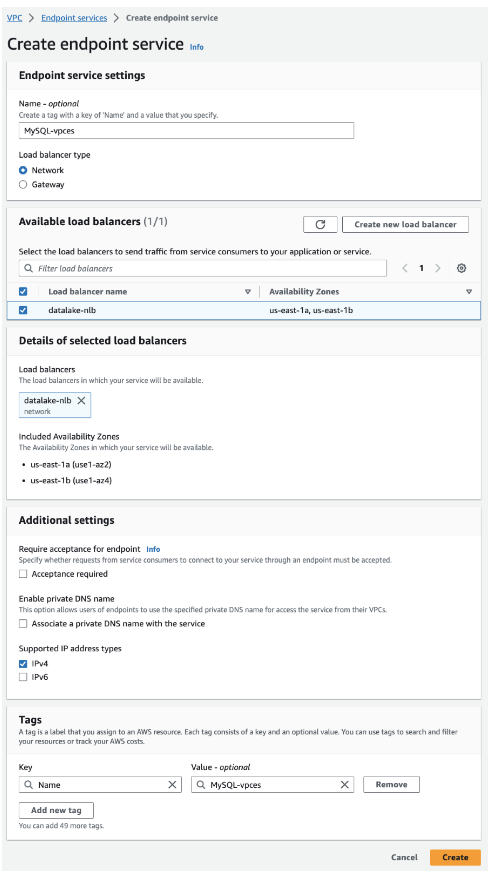

STEP 5: Create the VPC Endpoint Service:

A VPC Endpoint Service exposes AWS resources through either a Gateway Load Balancer or a Network Load Balancer. In our case, we use the NLB created in Step 3.

For the supported IP address type, we can choose IPv4.
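For readers who prefer scripting over the console, the same step could be sketched with `boto3` as follows. This assumes `boto3` is installed and AWS credentials are configured; the NLB ARN is supplied by the caller, and `AcceptanceRequired=True` is chosen here to match the manual acceptance used later in this walkthrough.

```python
def endpoint_service_request(nlb_arn: str, acceptance_required: bool = True) -> dict:
    """Build the parameters for an NLB-backed, IPv4-only endpoint service."""
    return {
        "NetworkLoadBalancerArns": [nlb_arn],
        "AcceptanceRequired": acceptance_required,
        "SupportedIpAddressTypes": ["ipv4"],
    }


def create_endpoint_service(nlb_arn: str) -> str:
    """Create the endpoint service and return its service name."""
    import boto3  # assumption: installed, with credentials configured

    ec2 = boto3.client("ec2")
    response = ec2.create_vpc_endpoint_service_configuration(
        **endpoint_service_request(nlb_arn)
    )
    return response["ServiceConfiguration"]["ServiceName"]
```

The returned service name is the value entered later when the VPC Endpoint is created in the data lake VPC.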

STEP 6: Create the data lake EC2 instance:

In the private subnet of the datalake-vpc, create an EC2 instance where the data lake will be installed. Attach a security group to the EC2 instance that allows inbound traffic from within the VPC. In this setup, Kylo uses TCP port 61616 and Dremio uses TCP port 5432. Depending on the data lake used, the respective port must be allowed in the security group.

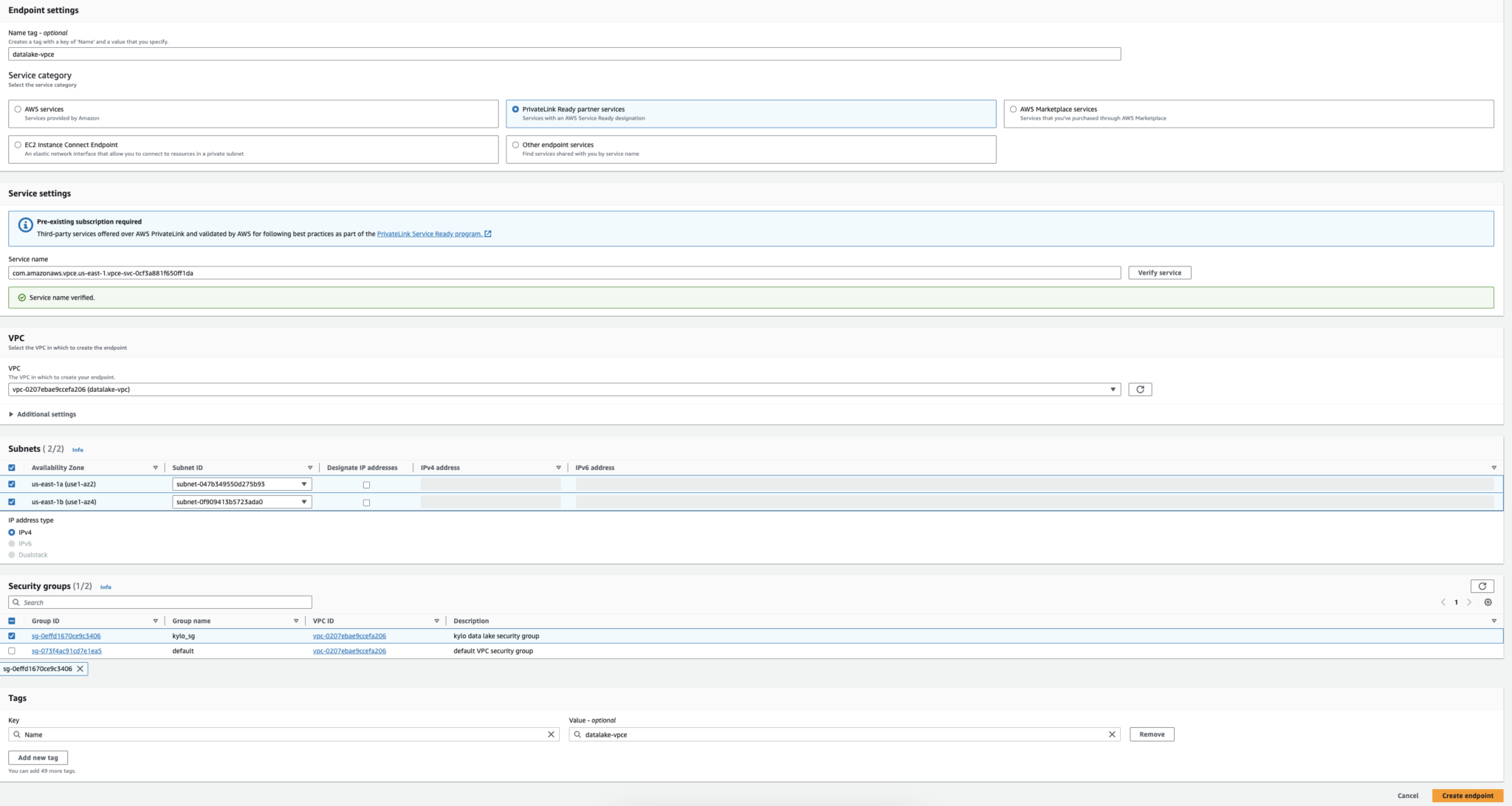

STEP 7: Create the VPC Endpoint:

There are three types of VPC endpoints: Interface endpoints, Gateway Load Balancer endpoints, and Gateway endpoints. Interface endpoints and Gateway Load Balancer endpoints are powered by AWS PrivateLink and use an Elastic Network Interface (ENI) as the entry point for traffic destined for the service. Interface endpoints are typically accessed using the public or private DNS name associated with the service, while Gateway endpoints and Gateway Load Balancer endpoints serve as a target for a route in your route table for traffic destined for the service.

The service category ‘PrivateLink Ready partner services’ must be selected to enable communication between the endpoint in this VPC and the endpoint service in the other VPC.

In the service settings, enter the service name of the VPC Endpoint Service created in Step 5 and verify the service.

Select the datalake-vpc and the Availability Zones so that the EC2 instance runs in one of the selected zones.

Set the IP address type to IPv4 and apply the same security group that is used for the EC2 instance to the endpoint.
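The console settings above map onto a single `create_vpc_endpoint` call. The sketch below assumes `boto3` is installed and credentials for the data lake account are configured; all IDs and the service name are supplied by the caller and are not taken from this setup.

```python
def interface_endpoint_request(vpc_id, service_name, subnet_ids, security_group_ids):
    """Build the parameters for an IPv4 interface endpoint."""
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": service_name,
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        "IpAddressType": "ipv4",
    }


def create_interface_endpoint(vpc_id, service_name, subnet_ids, security_group_ids):
    """Create the endpoint; return its ID and the DNS names assigned to it."""
    import boto3  # assumption: installed, with credentials configured

    ec2 = boto3.client("ec2")
    response = ec2.create_vpc_endpoint(
        **interface_endpoint_request(
            vpc_id, service_name, subnet_ids, security_group_ids
        )
    )
    endpoint = response["VpcEndpoint"]
    dns_names = [entry["DnsName"] for entry in endpoint.get("DnsEntries", [])]
    return endpoint["VpcEndpointId"], dns_names
```

One of the returned DNS names is what the MySQL client in the data lake VPC connects to once the connection has been accepted.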

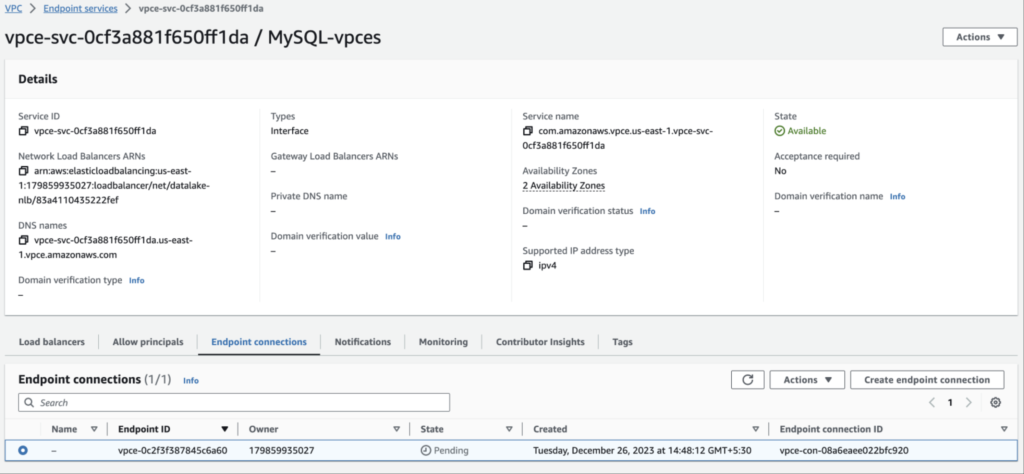

Once the VPC Endpoint is created, its status remains pending until the endpoint connection is accepted manually from the ‘Endpoint connections’ tab of the VPC Endpoint Service.

NOTE: This is sufficient for a PrivateLink setup across multiple VPCs in the same AWS account. If PrivateLink is to be set up across different AWS accounts, the consumer AWS account must also be added as an allowed principal on the VPC Endpoint Service.
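For the cross-account case, the allowed principal is an ARN that identifies the consumer account. A minimal sketch is shown below; it assumes the standard `aws` partition, allows the whole account through its root principal (a narrower role or user ARN could be used instead), and relies on `boto3` with credentials for the account that owns the endpoint service.

```python
import re


def account_root_principal(account_id: str) -> str:
    """Return the root principal ARN for a 12-digit AWS account ID."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are exactly 12 digits")
    # Assumption: standard 'aws' partition.
    return f"arn:aws:iam::{account_id}:root"


def allow_account(service_id: str, account_id: str) -> None:
    """Permit the given account to create endpoints to the service."""
    import boto3  # assumption: installed, with credentials configured

    ec2 = boto3.client("ec2")
    ec2.modify_vpc_endpoint_service_permissions(
        ServiceId=service_id,
        AddAllowedPrincipals=[account_root_principal(account_id)],
    )
```

The consumer account can then create a VPC Endpoint against the service name, and the connection is accepted in the same way as in the single-account case.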

Testing the PrivateLink setup:

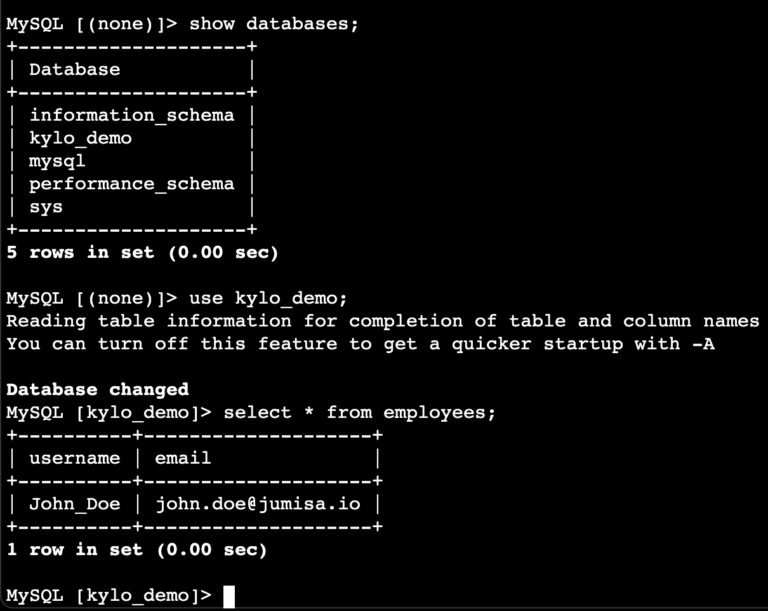

Once all the steps above are completed, we can check the setup by creating a database, adding a table, and inserting some values into the MySQL RDS.

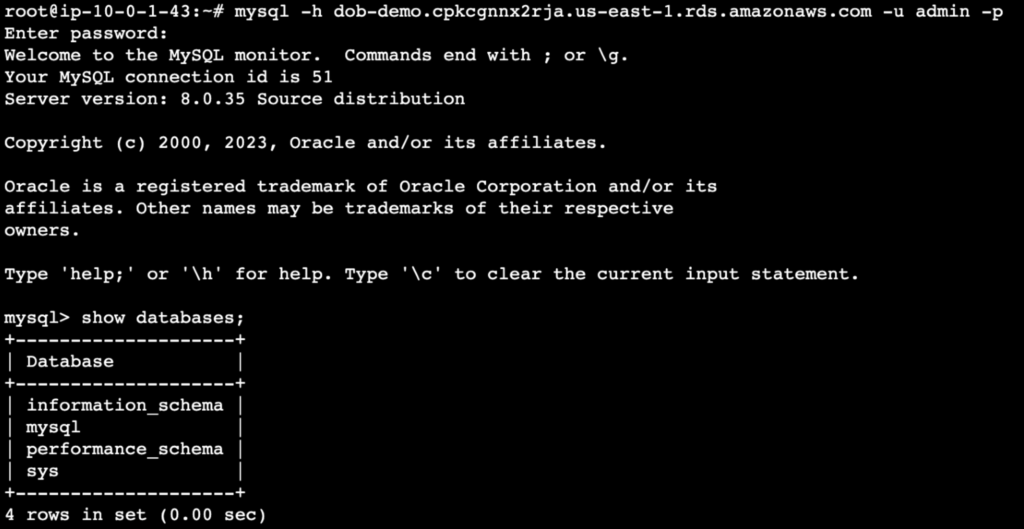

- Log in to the MySQL RDS using the RDS endpoint from the temporary EC2 jump host and list the databases.

$ mysql -h <rds-endpoint> -u <username> -p

mysql> show databases;

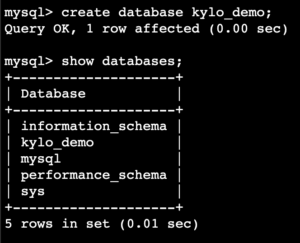

- Create a database of your choice. In this example, I have named the database kylo_demo.

mysql> create database <database>;

mysql> show databases;

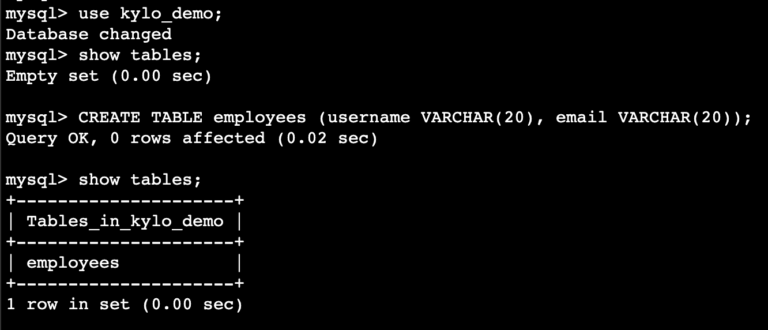

- Create a table of your choice in the database that we just created. I have named the table employees and included two fields, username and email.

mysql> use <database>;

mysql> show tables;

mysql> create table <table> (username varchar(20), email varchar(20));

mysql> show tables;

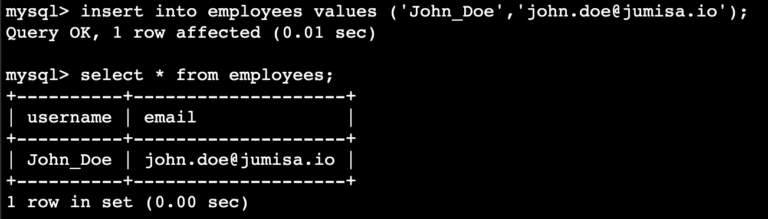

- We can now add an entry to the table and query the same.

mysql> insert into <table> values ('<username>','<email>');

mysql> select * from <table>;

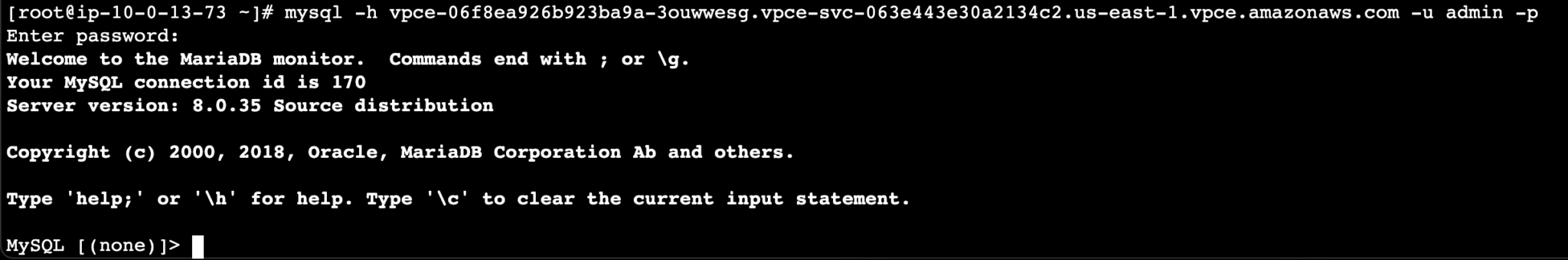

- Now, from the EC2 instance created in the datalake-vpc, log in to the MySQL RDS using the DNS name of the VPC Endpoint.

$ mysql -h <vpc-endpoint-dns> -u <username> -p

- Check that the changes made earlier from the jump host are visible through the endpoint.

mysql> show databases;

mysql> use <database>;

mysql> select * from <table>;
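If the login from the data lake instance hangs or fails, a plain TCP check can help separate network problems (security groups, a pending endpoint connection) from credential problems. The sketch below uses only Python's standard library; the host and port are whatever you would pass to the mysql client.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `can_connect("<vpc-endpoint-dns>", 3306)` returning False from the data lake instance suggests checking the endpoint status and security group rules before looking at MySQL credentials.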